TECHNICAL REPORT

| Grantee | University of Auckland |

| Project Title | Field-ready network-coded tunnels for satellite links |

| Amount Awarded | USD 85,618 |

| Dates covered by this report: | 2021-12-22 to 2024-06-30 |

| Economies where project was implemented | Kiribati, New Zealand |

| Project leader name | Ulrich Speidel |

| Project Team | Sathiamoorthy Manoharan; Wayne Reiher; Lucas Charles Betts; Staff of the Ministry of Information, Communication and Transport of Kiribati |

| Partner organization | Ministry of Information, Communication and Transport (Kiribati) |

Project Summary

The Internet's staple Transmission Control Protocol (TCP) is well known to struggle over shared, long-latency bottleneck links such as satellite links. Such links are an expensive resource, and TCP often leaves them underused. Titrated coded tunnels can help TCP perform better in such scenarios. The initial aim of the project was to prove the practical usability of such a titrated coded tunnel on a geostationary satellite link to a community WiFi hub at a school on remote Chatham Island, New Zealand, along with two reference engineering links.

Changes at our project partners in 2022 necessitated relocating the deployment to a different site, in Tarawa, Kiribati, using two side-by-side Starlink links.

Table of Contents

- Background and Justification

- Project Implementation Narrative

- Project Activities, Deliverables and Indicators

- Project Review and Assessment

- Diversity and Inclusion

- Project Communication

- Project Sustainability

- Project Management

- Project Recommendations and Use of Findings

- Bibliography

Background and Justification

Satellite links to remote communities: Often the only choice, but it's just not like fibre!

Many remote communities currently rely on satellite links for Internet connectivity. These range from many dozens of Pacific islands and coastal and inland communities in Papua New Guinea to remote mining camps in Australia, ships at sea and aircraft in flight. As long as the up-front cost of a cable remains prohibitive, such communities must share whatever amount of bottleneck satellite bandwidth they can afford, and the ongoing cost of satellite bandwidth remains very high compared to fibre. The smaller, poorer, and more remote the community, the larger the economic burden of connectivity.

Such links offer rates of at most a few hundred megabits per second (Mb/s), and in practice many links run at rates in the single-digit or low double-digit Mb/s range. While higher-rate links are available in principle, their cost often rivals that of a permanent fibre connection, so larger and/or richer communities that could afford them generally opt for fibre instead.

To add insult to injury, many Internet service providers (ISPs) catering for communities on satellite currently find their links underutilised, even during peak periods. Sometimes, this leads to blame being traded between ISPs and end users. This effect is, however, not the ISP's fault: It is caused by TCP, the Internet's mainstay transport layer protocol.

Why TCP does not like satellite links: TCP queue oscillation

TCP senders try to gauge the amount of available bottleneck bandwidth in order to adjust the amount of data they send before waiting for a receipt acknowledgment (ACK) from the remote receiver. This amount is known as the TCP congestion window, or cwnd for short, and each sender maintains one for each connection. However, the long signal round-trip time (RTT) of satellite links (upwards of 500 milliseconds (ms) for geostationary (GEO) and upwards of 120 ms for medium-earth orbit (MEO) satellites) means that an arriving ACK can only confirm that bandwidth was available at the satellite link bottleneck one RTT earlier, which on a GEO link is about half a second to a second ago. Unfortunately, this doesn't mean that this capacity is still available.

For satellite links, this is a serious problem as they are bandwidth bottlenecks: Deployed bandwidths range from single digit figures to hundreds of megabits per second (Mb/s), whereas those of the feeder networks usually reach gigabit rates (measured in Gb/s). When TCP senders overestimate bandwidth, i.e., have an excessively large cwnd, they send too much. This leads to traffic banking up at the input queue to the satellite link bottleneck. The result: Packets are delayed across the link or even dropped when the input queue overflows.
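To put rough numbers on this: the amount of data that must be in flight to keep a link busy is about the link rate multiplied by the RTT (the bandwidth-delay product), and it is this quantity that the senders' combined congestion windows are trying to hit. The short Python sketch below computes it in packets. The function name is our own, the 1500-byte packet size is our assumption, and the rates and RTTs are example figures taken from this report.

```python
def bdp_packets(rate_mbps: float, rtt_ms: float, packet_bytes: int = 1500) -> float:
    """Bandwidth-delay product expressed in full-size packets.

    Roughly the aggregate congestion window (summed over all TCP senders)
    needed to keep the bottleneck busy for one round trip.
    packet_bytes=1500 is an assumed typical full-size IP packet.
    """
    bits_in_flight = rate_mbps * 1e6 * (rtt_ms / 1e3)
    return bits_in_flight / (8 * packet_bytes)


# Example figures from this report: GEO links at 16 and 64 Mb/s with an RTT
# of about 500 ms, and a 160 Mb/s MEO link with an RTT of about 120 ms.
for rate, rtt in [(16, 500), (64, 500), (160, 120)]:
    print(f"{rate:>4} Mb/s, {rtt} ms RTT: ~{bdp_packets(rate, rtt):.0f} packets in flight")
```

A sender that has pushed its share of this window a little too far only finds out one RTT later, by which time the excess is already sitting in, or overflowing from, the input queue.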

This in turn leads to delayed or missing ACKs, which causes the TCP senders to back off, i.e., reduce the congestion window and thus send less data or stop sending altogether for a while. As this happens at more or less the same time for all senders that are awaiting ACKs, these senders back off collectively, in turn starving the link input queue that just overflowed.

The link input queue then drains, leaving the link idle. This, rather than lack of demand, causes the residual underutilisation seen on many satellite links. Thereafter, data packets elicit ACKs again, enticing TCP senders to increase their congestion window, and the cycle repeats. This effect is known as TCP queue oscillation or global synchronisation. In practice, it can prevent TCP from accessing up to half of the theoretically available capacity.
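The cycle described above can be reproduced with a deliberately crude round-based model. The Python sketch below is our own illustration, not the simulator used in this project. It assumes that one round equals one RTT, that all senders detect loss in the same round (the global synchronisation case), and that each sender halves its window on loss and otherwise grows it by one packet per round. The sender count, link capacity and buffer size are arbitrary example values, and the function name is hypothetical.

```python
def simulate(n_senders: int = 10, link_pkts_per_rtt: int = 100, buffer_pkts: int = 20,
             start_cwnd: int = 5, rounds: int = 60) -> tuple[list[float], list[int]]:
    """Toy model: returns per-round link utilisation and the rounds with queue overflow."""
    cwnd = [start_cwnd] * n_senders
    queue = 0
    utilisation: list[float] = []
    loss_rounds: list[int] = []
    for r in range(rounds):
        backlog = queue + sum(cwnd)               # queued packets plus this round's sends
        served = min(backlog, link_pkts_per_rtt)  # the link sends at most its capacity
        utilisation.append(served / link_pkts_per_rtt)
        queue = backlog - served
        if queue > buffer_pkts:
            # Tail drop at the input queue; by assumption every sender sees a loss
            # in this round and halves its congestion window (global synchronisation).
            queue = buffer_pkts
            loss_rounds.append(r)
            cwnd = [max(1, w // 2) for w in cwnd]
        else:
            # No loss seen: each sender grows its window by one packet per RTT.
            cwnd = [w + 1 for w in cwnd]
    return utilisation, loss_rounds


util, losses = simulate()
first = losses[0]
print(f"first overflow in round {first}; utilisation per round afterwards:")
print(" ".join(f"{u:.0%}" for u in util[first + 1:first + 15]))
```

With these example values the model settles into a seven-round cycle: the queue overflows, every window halves at once, and utilisation dips to 70% before the windows regrow, even though offered demand never actually falls.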

Classic remedies - more buffer and performance-enhancing proxies - are not ideal

The classical approaches to tackling TCP queue oscillation on satellite links have been three-fold:

1) The first approach consists of adding buffer memory to the input of the satellite link. This allows the input queue to grow longer before it drops packets. Unfortunately, it also means that the queue tends to stay long (standing queue), increasing the RTT for all senders, which in turn slows down the congestion window growth for new connections. This approach is now widely seen as problematic as it contributes to bufferbloat.

2) The second approach is to use a connection-breaking performance-enhancing proxy (PEP). This type of PEP pretends to be the intended server when a client attempts to connect: it accepts the connection attempt on behalf of the server and then establishes a separate connection to the server, pretending to be the client. This reduces the RTT on each of these connections compared to a direct connection between client and server, allowing the cwnd to open faster. An open-source implementation (PEPsal) is available. Our experiments show that PEPsal can indeed accelerate individual TCP connections over a 64 Mb/s GEO satellite link by a factor of about 2-3 and increase overall goodput by about 20% at load levels where one would typically observe TCP queue oscillation, but that it reduces overall performance on 16 Mb/s links.

3) The third approach uses another type of PEP, the so-called ACK spoofer, which acknowledges TCP packets on behalf of the other side and caches them until an ACK from the actual intended receiver arrives. This includes the ability to retransmit packets for which no ACKs have been seen. There are currently no practically usable ACK spoofers available as open source or as affordable standalone devices (i.e., not bundled with satellite links); however, one would expect performance comparable to that of connection-breaking PEPs.

Both connection-breaking PEPs and ACK spoofers violate the end-to-end principle of the Internet, meaning that they can break protocols. There is also an unavoidable ambiguity when a PEP has received and acknowledged data that it subsequently cannot deliver to the far end. In this case, the states of the two TCP end hosts diverge: one is left with the impression that its data has been received, whereas the other has never received it.

Network-coded tunnels boost goodput

The basic idea behind the titrated network-coded tunnels is this: What if we could make the spare capacity created by TCP back-offs available to transmit some of the packets that were dropped at the input queue to the link? This would reduce the number of missing ACKs, allowing senders to back off less severely and resume transmission more quickly. The challenge: How do we know which packets are getting dropped and what we would need to retransmit?

The good news is that with network coding, we don't need to know any of this. So how does it work? It's actually quite simple: Packets are just sets of bytes, and bytes are binary numbers. So say we have three Internet protocol (IP) packets (numbers) x, y, and z that we want to send to a receiver. We can send x, y and z as is, but if we have a satellite link between us and the receiver, and the satellite link gets to choose which packets to drop, we cannot be sure which of x, y, and z will arrive.

Alternatively, we can do a bit of mathematics. Using a pseudo-random generator, we can generate random coefficients c11, c12, c13, c21, c22, c23, ... and so on, and compute a few linear combinations r1, r2, ... as follows to generate a system of linear equations at an encoder before our three packets get to the satellite link:

- c11* x + c12* y + c13* z = r1

- c21* x + c22* y + c23* z = r2

- c31* x + c32* y + c33* z = r3

You, the astute reader, may remember systems of linear equations from high school maths, and you may also remember that such a system can generally be solved if the number of equations equals the number of variables. Here, we have three variables, and three equations, so the system is probably solvable. Forming this system is the network coding step, but we're not ready to solve anything quite yet.

Having generated the system of linear equations, we now drop x, y and z entirely at our encoder: these packets will never make it onto the satellite link. Instead, we send r1, r2, and r3 to a decoder on the far side of the satellite link as coded packets, each in the payload of a user datagram protocol (UDP) packet. We also get the decoder to use the same pseudo-random generator, with the same seed, to generate the same coefficients.
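The encoding step can be sketched in a few lines of Python. This is an illustration under our own assumptions, not the Steinwurf implementation: packets are treated as equal-length byte strings, each byte position is encoded separately, and all arithmetic is done on integers modulo the prime 257, so that ordinary multiplication and addition behave like the high-school algebra above. (Practical network codes commonly use a finite field whose elements fit into a byte, such as GF(2^8), so that coded packets stay the same size as the originals.) The names `coefficients`, `encode` and `P` are hypothetical. Sharing the seed is what lets the decoder regenerate identical coefficients.

```python
import random

P = 257  # prime modulus for the illustrative arithmetic (our choice, see above)


def coefficients(seed: int, generation_size: int, n_coded: int) -> list[list[int]]:
    """Pseudo-random coefficient rows c[i][j]; encoder and decoder use the same seed."""
    rng = random.Random(seed)
    return [[rng.randrange(1, P) for _ in range(generation_size)] for _ in range(n_coded)]


def encode(packets: list[bytes], coeffs: list[list[int]]) -> list[list[int]]:
    """Coded packet r_i = sum_j c[i][j] * packet_j (mod P), byte position by byte position."""
    length = len(packets[0])
    if any(len(p) != length for p in packets):
        raise ValueError("pad packets to equal length before encoding")
    return [
        [sum(c * pkt[k] for c, pkt in zip(row, packets)) % P for k in range(length)]
        for row in coeffs
    ]


x, y, z = b"hello", b"coded", b"links"
encoder_coeffs = coefficients(seed=2024, generation_size=3, n_coded=3)
r1, r2, r3 = encode([x, y, z], encoder_coeffs)

# The decoder regenerates exactly the same coefficients from the shared seed.
decoder_coeffs = coefficients(seed=2024, generation_size=3, n_coded=3)
print(decoder_coeffs == encoder_coeffs)
```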

Then the decoder can recreate and solve the system of linear equations above to recover x, y, and z. Again, you may remember this from high school maths, except that here, the decoder is a computer and rather good at working this sort of problem out. It can then send x, y, and z onto their onward journey to the actual receiver. Plus, there's a bit of clever cheating that the computer gets to do, which they probably didn't tell you about in school.

So far, we have replaced the transmission of packets x, y and z by the transmission of packets r1, r2 and r3, and we can still get x, y and z back at the far end. But that only works if none of r1, r2 and r3 gets dropped anywhere (and if the three equations are linearly independent, which is highly probable).

To guard against the danger of one of r1, r2, or r3 getting dropped, we can compute yet another linear combination at the encoder:

- c41* x + c42* y + c43* z = r4

If we also send that r4, then the link can drop any one of r1, r2, r3 or r4 and the decoder can still work out x, y and z.

Even better: To add flexibility, we can use more than three original packets in each combination and generate the corresponding number of linear combinations. We can also generate more than one extra linear combination, which allows more coded packets to be dropped before the original packets become unrecoverable at the receiver.
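The full round trip can be sketched in Python: a generation of n original packets, m extra linear combinations, an arbitrary set of coded packets lost on the way, and a decoder that solves whatever equations arrive. This is our own illustration, not the production coder. For readability it does its arithmetic modulo the prime 257 rather than in the finite field a real codec would use, and the function names, seed and packet contents are hypothetical. The decoder uses Gauss-Jordan elimination and reports failure (returns None) if the surviving equations do not pin down all n unknowns, either because more than m packets were lost or, occasionally, because the random coefficients happened to be linearly dependent.

```python
import random
from typing import Optional

P = 257  # illustrative prime modulus (our assumption, see lead-in)


def coefficients(seed: int, n: int, total: int) -> list[list[int]]:
    """total rows of n pseudo-random coefficients; both ends derive them from the seed."""
    rng = random.Random(seed)
    return [[rng.randrange(1, P) for _ in range(n)] for _ in range(total)]


def encode(packets: list[bytes], coeffs: list[list[int]]) -> list[list[int]]:
    """One coded packet per coefficient row: sum_j c[i][j] * packet_j (mod P), per byte."""
    length = len(packets[0])
    return [[sum(c * p[k] for c, p in zip(row, packets)) % P for k in range(length)]
            for row in coeffs]


def decode(received: dict[int, list[int]], coeffs: list[list[int]],
           n: int) -> Optional[list[bytes]]:
    """Solve for the n originals from {equation index: coded payload}, or return None."""
    # Augmented matrix: each row is [coefficients | coded payload].
    rows = [coeffs[i] + payload for i, payload in received.items()]
    for col in range(n):  # Gauss-Jordan elimination modulo P
        pivot = next((r for r in range(col, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            return None  # too few independent equations survived
        rows[col], rows[pivot] = rows[pivot], rows[col]
        inv = pow(rows[col][col], P - 2, P)  # inverse via Fermat's little theorem
        rows[col] = [v * inv % P for v in rows[col]]
        for r in range(len(rows)):
            factor = rows[r][col]
            if r != col and factor:
                rows[r] = [(a - factor * b) % P for a, b in zip(rows[r], rows[col])]
    # After elimination, row i reads "packet_i = <payload>".
    return [bytes(row[n:]) for row in rows[:n]]


n, m = 4, 2  # generation size and redundancy (example values)
originals = [b"pkt-0001", b"pkt-0002", b"pkt-0003", b"pkt-0004"]
coeffs = coefficients(seed=7, n=n, total=n + m)
coded = encode(originals, coeffs)

# The link drops any m of the n + m coded packets, here equations 1 and 4.
received = {i: coded[i] for i in range(n + m) if i not in (1, 4)}
recovered = decode(received, coeffs, n)
print("recovered" if recovered == originals else "not decodable from these packets")
```

Dropping a third packet from the same generation leaves only three equations for four unknowns, and `decode` then returns None, which is exactly the situation in which TCP would see a loss.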

This prevents TCP senders from backing off quite as severely as they do without coding, and allows them to recover more quickly from back-offs. As such, the network codes in use here act like a forward error correcting code (FEC), or more specifically as an erasure-correcting code.

You may be aware that forward error correcting codes, and even erasure-correcting codes, have a long tradition in satellite communication; however, their conventional use is between satellite modems only. This means that they currently do not protect the part of the link where the packet loss occurs, i.e., the input queue, which sits in front of the first modem the packets get to. The coded tunnels we use run across the entire link, modems and their input queues included.

Using such coded tunnels generally increases goodput across links and can also replace existing erasure-correcting FEC on the link. In configuring coded tunnels, the original packets that contribute to each linear combination are referred to as a generation, their number is known as the generation size and the number of additional coded packets sent as the redundancy.
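Generation size and redundancy together determine how much loss a generation can absorb. As a rough guide: if each coded packet were lost independently with probability p, and any n of the n + m coded packets sufficed to decode (an idealisation, since random coefficients very occasionally produce dependent equations), a generation would be decodable whenever at most m of its packets were lost. The Python sketch below evaluates that binomial probability; the function name and the generation size of 16 in the example are our own choices. Independence is a strong simplifying assumption: tail drops at an overflowing queue tend to come in bursts, so real-world figures can be considerably worse.

```python
from math import comb


def p_generation_decodable(n: int, m: int, loss: float) -> float:
    """Probability that at most m of the n + m coded packets are lost.

    Assumes independent losses with probability `loss` per packet and that
    any n received coded packets are enough to decode (both idealisations).
    """
    total = n + m
    return sum(comb(total, k) * loss**k * (1 - loss) ** (total - k) for k in range(m + 1))


# Example: generation size 16 at 5% independent loss, with increasing redundancy.
for m in (0, 1, 2, 4):
    overhead = m / 16
    print(f"m={m}: overhead {overhead:.0%}, P(decodable) = {p_generation_decodable(16, m, 0.05):.3f}")
```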

The underlying coding module of our solution was developed by Steinwurf ApS [1] of Aalborg, Denmark prior to us embarking on our work with satellite links. Under a previous ISIF Asia-funded project, Steinwurf used this module as the basis of a coded tunnel module custom-made to our specifications and subsequently added a number of features at our request. The module has so far performed well but has yet to be subjected to an extended burn-and-soak test under load in our simulator.

Titration keeps surplus coded packets off the link

Unfortunately, most additional coded packets in a coded tunnel will not get dropped by the input queue, so they take up capacity on the satellite link. This can lead to drops of subsequent packets at the input queue when such surplus packets occupy queue capacity ahead of them. So this is not ideal.

The fact that the point of loss (the input queue to the satellite modem) is before the actual bandwidth bottleneck gives us an opportunity to intervene, however, and stop the surplus coded packets from reaching the actual link.

This is where our titrator software comes in. Developed under our 2019 ISIF grant, the titrator sits topologically between the encoder and the actual satellite link input queue (see diagram below). The encoder sends each coded packet that belongs to the coded version of a generation to one UDP port on the decoder, and the additional coded packets to another UDP port. Each coded packet also includes a small header that indicates which generation the coded packet is associated with, and which equation within the system the coded packet represents (so the decoder can supply the right coefficients).

The titrator implements a shock absorber queue with just slightly less capacity than the original satellite link input queue. Based on their destination UDP port, the titrator directs all incoming coded packets that belong to the coded version of each generation to this shock absorber queue. The additional coded packets are put into the titrator's second queue, the redundancy queue, along with a timestamp that records when they were enqueued. Finally, the titrator uses a token bucket filter preceded by a short residual queue that complements the shock absorber queue and dequeues from the latter with first priority. The token bucket filter then sends packets to the satellite link at the link's nominal rate. This prevents any queue build-up (let alone drops) at the actual satellite link input queue. Instead, this now happens at the tail of the combined residual and shock absorber queue.
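A token bucket filter is a standard rate limiter: tokens (here counted in bytes) accumulate at the configured rate up to a small burst allowance, and a packet may only leave when enough tokens are available. The Python sketch below illustrates the idea. It is not the titrator's code; the class and method names are hypothetical, the current time is passed in as an argument so that the behaviour is deterministic, and the 16 Mb/s rate and 1500-byte burst in the example are assumptions.

```python
class TokenBucket:
    """Releases packets at no more than `rate_bps` on average (illustrative sketch)."""

    def __init__(self, rate_bps: float, burst_bytes: int) -> None:
        self.rate_bytes_per_s = rate_bps / 8.0
        self.capacity = burst_bytes
        self.tokens = float(burst_bytes)  # start with a full bucket
        self.last = 0.0                   # time of the last refill, in seconds

    def try_send(self, packet_bytes: int, now: float) -> bool:
        """Return True (and spend tokens) if a packet of this size may be sent at time `now`."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_bytes_per_s)
        self.last = now
        if self.tokens >= packet_bytes:
            self.tokens -= packet_bytes
            return True
        return False  # the packet waits in the queue in front of the bucket


# Example: pacing 1500-byte packets onto a link with an assumed nominal rate of 16 Mb/s.
bucket = TokenBucket(rate_bps=16e6, burst_bytes=1500)
print(bucket.try_send(1500, now=0.0))     # the full bucket allows one packet
print(bucket.try_send(1500, now=0.0001))  # 0.1 ms later only ~200 bytes of tokens exist
print(bucket.try_send(1500, now=0.001))   # 1 ms later the bucket has refilled
```

Keeping the burst allowance at a single packet means the bucket never releases packets faster than the link can serialise them, so no queue can form at the modem itself.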

As it forwards packets to the satellite link from these queues, the titrator keeps count of the number of coded packets it has seen for each generation.

Only when the shock absorber queue drains completely does the residual queue de-queue from the redundancy queue. In doing so, it discards any packets that are either surplus because the titrator has already forwarded enough packets for the respective generation, or packets that have been in the redundancy queue for so long that TCP would have already sent a retransmission. This all but ensures that additional coded packets can only reach the link if they are still needed at the decoder.
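The queueing and discard rules described in the last few paragraphs can be summarised in a short Python sketch. It is a simplified model under our own assumptions, not the titrator itself: packets are plain records rather than UDP datagrams, the destination-port test is reduced to a boolean flag, the residual queue and the token bucket are left out (a call to `dequeue()` stands for "the rate limiter will accept one more packet now"), the redundancy queue is unbounded, and `max_age` is a hypothetical parameter standing in for "so long that TCP would have already sent a retransmission". All class and attribute names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodedPacket:
    generation: int   # generation this coded packet belongs to
    index: int        # which equation of the generation's system it carries
    redundant: bool   # stands in for "arrived on the redundancy UDP port"


class TitratorSketch:
    """Simplified model of the titrator's queues (hypothetical names throughout)."""

    def __init__(self, generation_size: int, shock_capacity: int, max_age: float) -> None:
        self.n = generation_size
        self.shock = deque()        # shock absorber queue for each generation's own coded packets
        self.shock_capacity = shock_capacity
        self.redundancy = deque()   # (enqueue time, packet) for the additional coded packets
        self.max_age = max_age      # hypothetical staleness threshold in seconds
        self.forwarded = {}         # generation -> coded packets already sent to the link

    def enqueue(self, pkt: CodedPacket, now: float) -> bool:
        """Accept a packet from the encoder; returns False if it is tail-dropped."""
        if pkt.redundant:
            self.redundancy.append((now, pkt))
            return True
        if len(self.shock) >= self.shock_capacity:
            return False  # the drop now happens here rather than at the satellite modem
        self.shock.append(pkt)
        return True

    def dequeue(self, now: float) -> Optional[CodedPacket]:
        """Next packet for the link whenever the rate limiter allows one, or None."""
        if self.shock:  # the shock absorber queue always has priority
            return self._forward(self.shock.popleft())
        while self.redundancy:  # only reached once the shock absorber queue is empty
            enqueued_at, pkt = self.redundancy.popleft()
            if self.forwarded.get(pkt.generation, 0) >= self.n:
                continue  # surplus: enough packets of this generation already sent
            if now - enqueued_at > self.max_age:
                continue  # stale: TCP would have retransmitted by now
            return self._forward(pkt)
        return None

    def _forward(self, pkt: CodedPacket) -> CodedPacket:
        self.forwarded[pkt.generation] = self.forwarded.get(pkt.generation, 0) + 1
        return pkt


# Generation 0 (n = 3) meets a shock absorber queue with room for only 2 packets.
t = TitratorSketch(generation_size=3, shock_capacity=2, max_age=0.5)
accepted = [t.enqueue(CodedPacket(0, i, redundant=False), now=0.0) for i in range(3)]
for i in (3, 4):
    t.enqueue(CodedPacket(0, i, redundant=True), now=0.0)
sent = []
while (pkt := t.dequeue(now=0.1)) is not None:
    sent.append(pkt.index)
print(accepted, sent)
```

In this example equation 2 is tail-dropped, so exactly one of the two additional coded packets (equation 3) is forwarded to make up for it, and the other is discarded before it can occupy link capacity.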

With a titrator, TCP can therefore utilise almost all of the available link capacity, and our simulations show that it achieves this at load levels commonly observed with oscillating queues in the field.

Genesis of the project

At the University of Auckland, we first looked into network coding across satellite links into the Cook Islands, Niue and Tuvalu during a 2015 experimental deployment on behalf of PICISOC under an ISIF Asia grant.

At the time, we did not have access to the off-island endpoints of the respective satellite links, which meant that we had to place the world-side encoder in a location (the University of Auckland) where we were unable to capture and code all the TCP traffic to the island. We were therefore only able to code TCP traffic originating from a specific experimental subnet at the University of Auckland, with the other endpoint terminating in a dedicated subnet on the respective island.

Even this very limited investigation proved extremely promising, however, with extreme queue oscillation being seen on both GEO and MEO links, and significant goodput gains being observed for large TCP transfers with coding. During our deployment visit to Rarotonga, we managed to transfer a 770 MB Debian CD image from Auckland in just over 110 seconds using a tunnel over a 160 Mb/s inbound MEO link that had previously rarely achieved more than 60% link utilisation. Such transfer rates were unheard of in Rarotonga at the time, something we could witness first hand, of course: Downloading a 200 kB e-mail attachment via the local hotel WiFi at night would take over a minute.

What we did not know at the time was whether such goodput gains (or any gains) could be sustained if we coded all traffic on a link, and disconnecting an island and re-routing all of its traffic via an experimental device was not an option. In order to prove that coding could also work in such a scenario, we built a hardware-based simulator capable of simulating traffic to and from islands at the University, again supported by an ISIF Asia grant.

We then quickly learned that the timing of the additional coded packets in each generation mattered: Having them smash at gigabit rates into an already overflowing satellite link input queue meant losing most of these packets as well, just when they were most needed. So, with ISIF Asia's continued generous support, we experimented with various delays under yet another grant until we finally found the perfect solution in the form of the titrator. Along the way, we also learned a lot about simulating satellite links.

This meant that we were now ready to go back into the field with this technology.

Project Implementation Narrative

Integration and endurance testing for titrator and network coder

Why titrate?

The solution developed under our previous ISIF grant augments the network coder's encoding path with a titrator device. The network coder on its own takes a set of n incoming IP packets and turns them into a set of n+m coded packets, such that any n out of the n+m coded packets contain enough information to reconstruct the original n IP packets. This allows up to m coded packets to be lost en route to the decoder without any packet loss actually occurring for TCP senders that transmit across the coded path. In the case of narrowband satellite links, packets typically get lost due to tail drops at the input queue to the satellite link, i.e., they never make it out of the uplinking satellite ground station.

The Steinwurf kernel module that we use for network encoding and decoding runs on Ubuntu 18.04. Using "systematic encoding", it encodes each of the n incoming IP packets into a coded packet of its own and, once all n packets have been seen by the encoder, sends the additional m coded packets in one go. With satellite link rates on the order of dozens to perhaps low hundreds of Mb/s, TCP senders adapt roughly to the link rate, so the n incoming packets arrive at about that average effective rate and are sent on towards the satellite link at that rate as well. Not so in the case of the additional m packets: these are transmitted immediately, nose-to-tail, at the gigabit rate of the coding machine's satellite-link-facing interface. If the input queue at the satellite link is already overflowing because TCP has overestimated the bottleneck capacity, the additional m packets arrive at an already full queue and are discarded. If the queue is not overflowing, the m packets enter the link and take up capacity there that could be put to better use.
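Some rough arithmetic shows why this burst is a problem. The Python sketch below assumes 1500-byte packets, a 1 Gb/s interface on the coding machine, a 16 Mb/s satellite link and m = 8 extra packets per generation; all four values are illustrative, and the function name is our own. It computes how long the burst takes to reach the link's input queue and how many packets the link can send during that time.

```python
def burst_backlog(m: int, packet_bytes: int = 1500,
                  feeder_bps: float = 1e9, link_bps: float = 16e6) -> tuple[float, float]:
    """Return (burst duration in s, packets still queued when the burst has arrived)."""
    bits_per_packet = 8 * packet_bytes
    burst_duration = m * bits_per_packet / feeder_bps              # time for the burst to arrive
    sent_meanwhile = burst_duration * link_bps / bits_per_packet   # packets the link sends meanwhile
    return burst_duration, m - sent_meanwhile


duration, backlog = burst_backlog(m=8)
print(f"burst arrives in {duration * 1e6:.0f} microseconds; {backlog:.2f} of 8 packets still queued")
```

In other words, almost the entire burst lands in the input queue at once: the link needs 750 microseconds to send a single 1500-byte packet at 16 Mb/s, while the whole burst arrives within about 100 microseconds.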

The titrator brings the satellite link input queue forward and manages it itself, ensuring (a) that only enough of the m packets get to enter the link as are needed to compensate for packet drops among the first n packets, and (b) that the satellite link itself is fed at exactly the link rate.

Integration

Our laboratory setup used Steinwurf's kernel module on one machine and our titrator software on the next machine along the forwarding chain, with a network test access point (NTAP) between the two that helped us in the diagnosis of problems during development. For a practical deployment, there is no need for separate devices, however, and so our first goal was to integrate both functionalities on a single machine.

This proved somewhat harder than originally envisaged, because the kernel module relies on IP forwarding being handled by the kernel, requiring the system configuration parameter net.ipv4.ip_forward=1, whereas the titrator operates in user space and requires net.ipv4.ip_forward=0.

We thus opted to configure a physical machine with two internal virtual machines (VM) able to communicate with each other without the host operating system getting involved in traffic handling. One of these houses the Steinwurf kernel module and the other houses the titrator. The solution required not only a significant amount of virtual network configuration but also a major rework of the control script system that we use to start and stop the kernel module and the titrator.

Integration was further complicated by an as-yet unsolved issue with the latest version of the kernel module. While this did not turn into a showstopper - we were simply able to use the previous version, which works well - it did cost a number of weeks to investigate.

Integration was initially completed in May 2022, and testing of the integrated solution began.

Testing of the integrated solution

As the basic functionality of the components had already been established in our 2021 experiments, testing focused mainly on long-term stability and load. We started with a few shorter tests lasting hours or days, in part because we had been notified of planned power outages and could not have run a longer test at the time. This finally became possible in late June, and the solution successfully completed a three-week endurance run on a simulated 160 Mb/s medium-earth orbit satellite link under high load, a much more demanding scenario than the geostationary Chatham Island deployment originally planned for the latter part of this project.

Re-Integration and re-testing

In October 2022, Wayne Reiher joined the group as a PhD student and began to familiarise himself with the environment. Tasked with setting it up on a small development testbed, he suggested using Docker containers rather than fully-fledged virtual machines to run the coder and titrator. Although there were initially some doubts about whether this was even feasible, he has since demonstrated that it is workable. However, testing of the container-based integrated solution showed that this would either leave us stuck with Ubuntu 18.04 as the main Docker host platform (with little support horizon left) or necessitate a move to Rocky Linux. Eventually, our move to Starlink removed the need for an integrated solution (see below for details).

Engagement with Gravity Internet

In late 2022, Gravity Internet received an earlier-than-planned end-of-service notice for the Optus satellite underpinning the geostationary satellite link to Te One School we intended to use for the project. In combination with personnel changes at both Gravity Internet and Te One School in late 2022 and 2023, this led to the dismantling of the link before we were able to deploy the project. This required reconsideration of deployment site and partners. One of the original reasons for going to the Chatham Islands rather than the Pacific Islands had been New Zealand's Covid-19 border closures, which were now history.

Starlink in the Chatham Islands

Meanwhile, SpaceX's Starlink had started offering connectivity to the Chatham Islands, and early anecdotal feedback from island users indicated that it worked well for them. We also had the opportunity to acquire a Starlink unit for our lab in late 2022, and have since gained ample experience with it, and measurement data from it, at different locations in New Zealand's North Island as well as during Cyclone Gabrielle (when the principal investigator provided media comment on a number of occasions). We also gained a lot of experience with networking over Starlink, and were impressed by the ease of setup.

One of the insights from this work was that available Starlink bandwidth varies widely and undergoes step changes during satellite handovers. Packet loss is reasonably common, and round-trip times range anywhere from 16 ms to several hundred ms. This makes Starlink interesting territory for coded tunnels in its own right, but it also meant that we would have to forgo titration: the titrator feeds the link at its nominal rate, and on Starlink we would never know what the available bandwidth on the link would be.

Pivoting the project...

We consulted with the new Te One School principal, who told us about plans by the school's "educational" networking services provider N4L to install a Starlink terminal for connectivity at the school. The "educational" connection at the school was always going to be separate from the community hub that Gravity built: NZ's Ministry of Education has specific requirements in terms of accessibility of content and firewalling, as well as access to the network itself. N4L ("networks for learning"), however, is a crown-owned company tasked with provisioning networks to NZ schools, and as such was an obvious cooperation partner for us in a remote location such as the Chatham Islands. We reached agreement with N4L in May 2023 to coordinate our respective deployments, aiming at a deployment date in late August / early September 2023.

...and pivoting again

On June 14, the principal contacted us asking "Our auditors are questioning the funding relating to Gravity. Can you please confirm - in plain language - what the current situation is and how this relates to the $10k grant from July 2021." We answered promptly, explaining that the Internet NZ grant was entirely independent of our ISIF project and that we had no insight into the Internet NZ grant agreement nor were we privy to the grant amount or any of the financial or reporting arrangements surrounding that grant. We also reiterated our view of the current state of the (ISIF-funded) project and that we did not expect any co-funding from the school.

We thus ordered two Starlink units for side-by-side use in the project. These subsequently arrived and were subjected to the electrical safety testing required of any university-owned powered devices. We also set up network coding servers for these, including one in combination with our network lab's existing Starlink unit at the principal investigator's home as a proper "remote island" unit. In addition, we applied to the university's Digital Services to have the requisite external network ports opened, so the servers on the remote units could connect to the servers in our lab.

Meanwhile, we tried to discuss the details of our deployment with the school. Unfortunately, we did not get any meaningful reply. We made three further attempts by e-mail to obtain confirmation that they were still interested in proceeding with the project, and one of these e-mails was preceded by a phone call to the school office administrator, who promised us a prompt response. We never got a reply. It therefore became increasingly clear that we could not count on timely responses to our queries from the school.

In the face of a progressively closing time window for the project, we looked at potential alternative sites. As our original hope for this work had been to improve connectivity in the Pacific Islands, which we could not access during the pandemic, Pacific sites were obvious candidates. Moreover, having pivoted to Starlink, it was desirable to go to a site where Starlink connectivity was being provided via inter-satellite links. In April, we had become aware that Starlink roaming service was now available in Kiribati, and initial tests with the first Starlink user there showed that traffic from and to Tarawa Atoll in Kiribati was routed via Auckland. Project team member Wayne Reiher (on leave from his position as National Director of ICT of Kiribati to pursue his PhD in our group) quickly identified three possible deployment sites: the main hospital in Tarawa (operated under the Ministry of Health), the Kiribati Ministry of Finance as an alternate, and his own Ministry of Information, Communication and Transport as a backstop.

We thus chose to pivot to Kiribati instead, initially with the hospital as our preferred candidate as it had 24/7 power. However, 24/7 power was soon restored to all potential sites, removing this advantage. To avoid further complications with multiple parties, we then opted for deployment at the Ministry of Information, Communication and Transport, with a view to providing public WiFi in the vicinity of two sites close to the ferry boats in the port at Betio on Tarawa.

Formalities

One of the consequences of pivoting to Starlink was that we would not have access to the Internet side of the satellite link. Rather, we would have to terminate our coded tunnel at a site that we had physical access to and control over. The obvious choice was our lab, but bringing traffic in from outside the university through a tunnel and then releasing it inside the university's network opened a can of worms. What we had hoped would be a simple request to open a few network ports for us turned into a protracted negotiation lasting almost half a year. Our university's IT services required us to apply for an authority to operate (ATO).

This required a security scan of our lab servers (easy pass), a privacy assessment, a "product owner" and a "product manager", and when we'd just been told that we were 90% there, it transpired that someone thought that we were now an ISP and needed to demonstrate all sorts of compliance from Commerce Commission auditing of our non-existent pricing to ensuring that we offered a fair deal to competitors wishing to peer with us! Plus we had to ensure that our system was designed in such a way that non-university traffic could not land inside the university network or be observed by anyone while in transit here. By then, the number of people involved in processing our application (or, rather, our product owner's application - and the product owner kept changing by the week) had increased to around a dozen, and there were times when we thought we might not get this off the ground after all.

We persisted, however, and in November 2023 were granted the ATO, although this necessitated a small redesign.

A design tweak

The conditions attached to our ATO meant that our lab-based servers could not be the points where plain, unencrypted end-user traffic from Kiribati entered or arrived from the Internet. This required a point outside the university's network to send and receive such traffic. We were lucky to find one in the form of a Nectar cloud instance located at, but not inside, the university's network. Initial tests showed that it would be able to handle the expected load.

We therefore decided to send all user traffic from Kiribati to the Nectar server via an encrypted, UDP-based Wireguard tunnel. In uncoded operation (see "Uncoded operation via branch 1.png"), neither the user traffic itself nor the UDP packets carrying it through the tunnel are ever seen inside the university's internal network. In coded operation (see "Coded operation via branch 1.png"), the UDP packets of the Wireguard tunnel are routed through our coded tunnel between the Kiribati endpoints and the servers in our lab, where they emerge into the university's internal network. Note, however, that the destination for all of these UDP packets is still the Nectar server, and that their payload cannot be decrypted inside the university network; decryption only happens on the Nectar server. Similarly, Internet traffic entering the Wireguard tunnel at the Nectar server is routed to the other Wireguard tunnel end in Kiribati via our lab servers in encrypted form. "Coded operation via branch 1.png" shows a coded tunnel using the first of the two Starlink units; we can similarly use the second coding server and second Starlink unit together with the slc-akl1 coding server in our lab instead.

In layperson's terms, one can think of the Wireguard tunnel as a flexible rubber hose that guards end-user traffic from prying eyes and that always starts in Tarawa and ends at the Nectar server. When uncoded, the hose runs entirely outside the university network; when coded, the coded tunnel pulls the mid-section of the hose into the university, where the hose emerges from the coded tunnel and continues on to the Nectar server.

... and a small tweak to the tunnel software

Using Starlink as a provider meant operating with a carrier grade network address translator (CGNAT) in the path of the tunnel between Auckland and Kiribati. The original Steinwurf tunnel software assumed public addresses at both endpoints, so we had to make a small modification that allowed us to use the Auckland end as a "server" endpoint and the Kiribati end on the private IP address behind the CGNAT as a "client" endpoint.
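The modification itself is not documented here, but the general pattern for a "server" endpoint talking to a "client" behind a CGNAT can be sketched with Python's standard `socket` module: the client sends first, which creates the NAT mapping, and the server replies to whatever source address and port it observes. The function names are hypothetical, and the demo runs both roles on the loopback interface, so no actual NAT is exercised.

```python
import socket
import threading
from typing import Tuple

Addr = Tuple[str, int]

def serve_once(server: socket.socket) -> Addr:
    """Answer one datagram from a client whose address is not known in advance.

    Behind a CGNAT, the client's public address and port are whatever the NAT
    assigned; the server simply learns them from recvfrom() and replies there.
    """
    data, client_addr = server.recvfrom(2048)
    server.sendto(b"ack:" + data, client_addr)
    return client_addr

def client_hello(server_addr: Addr, payload: bytes, timeout: float = 2.0) -> bytes:
    """The client speaks first, which creates the outbound NAT mapping, then waits."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(timeout)
        client.sendto(payload, server_addr)
        reply, _ = client.recvfrom(2048)
        return reply

def local_demo() -> bytes:
    """Run both roles on loopback (no NAT involved, just the message flow)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as server:
        server.settimeout(2.0)
        server.bind(("127.0.0.1", 0))  # any free port
        worker = threading.Thread(target=serve_once, args=(server,))
        worker.start()
        reply = client_hello(server.getsockname(), b"hello")
        worker.join()
        return reply
```

Behind a real CGNAT, the client would also need to send traffic periodically so that its NAT mapping does not expire; how the modified tunnel software handles this is not described in this report.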

Designing for robust operation

Not having a local installer or dedicated technical person to work with at the Tarawa end, we also needed to ensure that we had sufficient redundancy in the system to allow remote maintenance and control. This is being achieved by having essentially two entirely independent coding servers with their own UPS and Starlink satellite terminal. Each of these can act as a coded tunnel endpoint, with the other being available for command and control of the server doing the coding. Each of the systems is connected:

- Directly to the other system. This allows a direct SSH connection between the two servers for remote maintenance.

- To a switch which also connects to the WiFi router. This acts as a backup for the direct connection above.

- To the baseboard management controller (BMC) of the other machine, for use with an Intelligent Platform Management Interface (IPMI) client. This allows remote reboots, for example, if one of the servers becomes unresponsive.

- Via USB cable to its UPS, which enables orderly shutdowns in the case of power outages.
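The order in which these paths would be tried during remote maintenance can be sketched as follows. This is a hypothetical illustration, not the project's actual tooling: the host names are placeholders, and the reachability flags are assumed to come from some prior check. The `ipmitool` invocation uses that tool's `lanplus` interface and `chassis power cycle` command, with `-E` reading the password from the `IPMI_PASSWORD` environment variable.

```python
from typing import List

def recovery_command(peer: str, direct_ok: bool, via_switch_ok: bool,
                     bmc_host: str, bmc_user: str) -> List[str]:
    """Pick the least disruptive way to reach a peer server (hypothetical names).

    Order mirrors the connections listed above: the direct cable first, then
    the switch path, and only if neither answers, a power cycle via the BMC.
    """
    if direct_ok:
        return ["ssh", f"{peer}-direct"]
    if via_switch_ok:
        return ["ssh", f"{peer}-switch"]
    # Last resort: hard power cycle over IPMI. The password comes from the
    # IPMI_PASSWORD environment variable (-E), not the command line.
    return ["ipmitool", "-I", "lanplus", "-H", bmc_host, "-U", bmc_user, "-E",
            "chassis", "power", "cycle"]
```

Returning the command as a list rather than running it keeps the decision logic testable; a caller could pass the list to `subprocess.run`.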

Integration and testing

One of the advantages of using a Starlink roaming subscription was that we could integrate and test the entire setup in Auckland, with the principal investigator's deck and sunroom serving as antenna sites and "Kiribati side" server room, respectively (see "Testing the setup in the sunroom.jpg" and "Testing the two Starlink Dishys on the deck.jpg"). This was completed successfully in early December, with the exception of a separate local add-on WiFi network that was added on site in Kiribati.

Testing included setting up the systems for power outages and then literally pulling the plug on the entire assembly as we knew that power in Tarawa could be unreliable.

Testing was completed successfully, having thrown up only a small number of issues:

- Running the coding servers on the Tarawa side in coded mode enslaves the interface facing the Starlink unit, preventing traffic not intended for the coded tunnel (such as local DNS or NTP, as well as control traffic) from flowing. We therefore needed a separate physical interface to talk to Dishy. We achieved this by adding a USB hub with a WiFi dongle to each server; in an ideal world, we would have had an extra Ethernet interface on each server, along with a switch between server and Dishy to accommodate it. Little did we know at the time that this would result in a three-month wild goose chase with SpaceX - see below.

- One of the aforementioned USB hubs proved to be a little flaky.

The principal investigator would like to acknowledge his wife's patience in putting up with two noisy servers close to her work area for almost two weeks.

Shipping

This deserves its own section, both because of its budget footprint and because of the lessons learned. We initially shipped a total of 8 boxes with a combined weight of just under 100 kg to Tarawa by air. This included the two uninterruptible power supplies. As these contain lead-acid batteries, we had to supply a current material safety data sheet for them and also authorise DHL to add them to the air waybill. Nobody told us about the latter until the principal investigator had to deal with it while camped on a beach during his pre-Christmas holidays!

We were also quoted an ETA for the boxes of early January 2024, corresponding to three weeks of transit time. Because extra airline capacity was added into Tarawa during December, the entire shipment arrived in Tarawa after only a few days, before Christmas. Wayne Reiher flew up before Christmas, prepared the site, and kindly negotiated a customs waiver for our equipment with the Ministry. He then retrieved the equipment from DHL in Tarawa on the first business day of 2024 to start local implementation.

Implementation in Tarawa

Wayne installed the equipment in Tarawa with kind support from the Ministry's staff (one of the images for this section shows Tooti and Kataua hard at work). The first couple of weeks were marred by bad weather: almost continuous rain after years of drought meant that it was simply not safe to venture onto roofs to install the Starlink antennas.

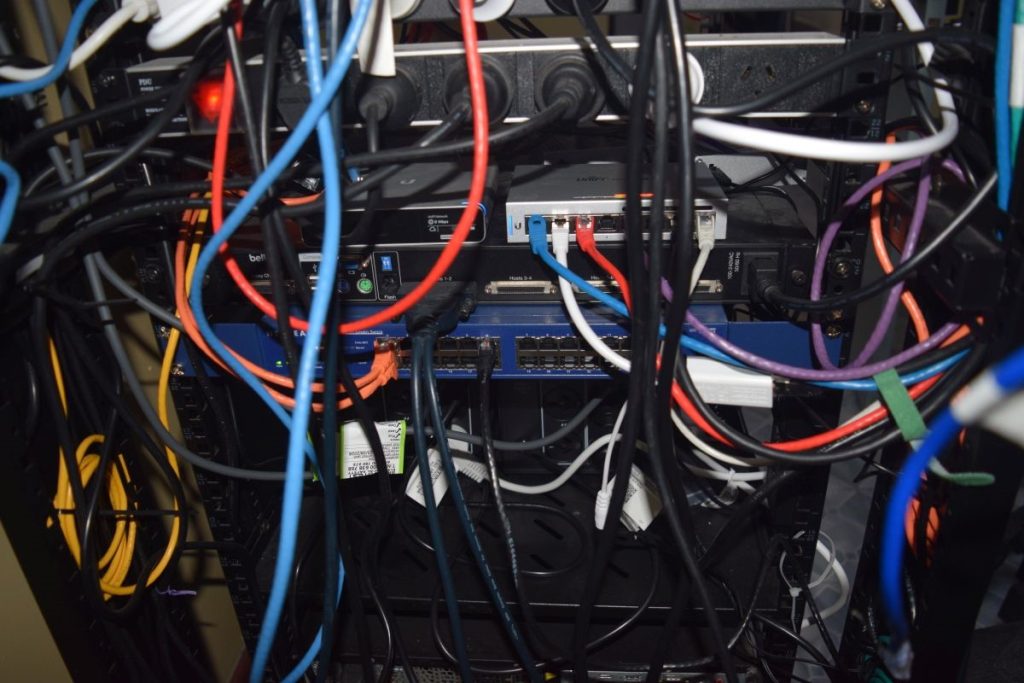

The work thus initially concentrated on installing the servers and UPSs into a rack at the Ministry (as shown in the attached image of the equipment in its new home in the Ministry's rack), as well as preparing the componentry for the add-on WiFi network for installation. A brief break in the weather permitted installation of the first Starlink unit and its connection to the local part of the add-on WiFi network. Wayne also brought the Ministry on board by connecting their network to the system, ensuring a larger user base.

Even this small network - as yet without coding turned on - proved very popular with dozens of users. Most of these were Ministry staff or visitors, as well as some youth in the vicinity. Wayne reported that the Ministry's front desk was giving out the login details to our network rather than to their own, and our WiFi router showed dozens of clients using the system.

Eventually, the weather improved to the point where Wayne was able to install both Starlink units along with the full add-on WiFi network, which now consists of four access points across two sites.

The two units are now located on different parts of the Ministry complex's roof, serving three Unifi WiFi access points in the complex and a further access point on a mast at MICT premises in the Kiribati National Shipping Line (KNSL) building across the road, which is connected via a wireless link.

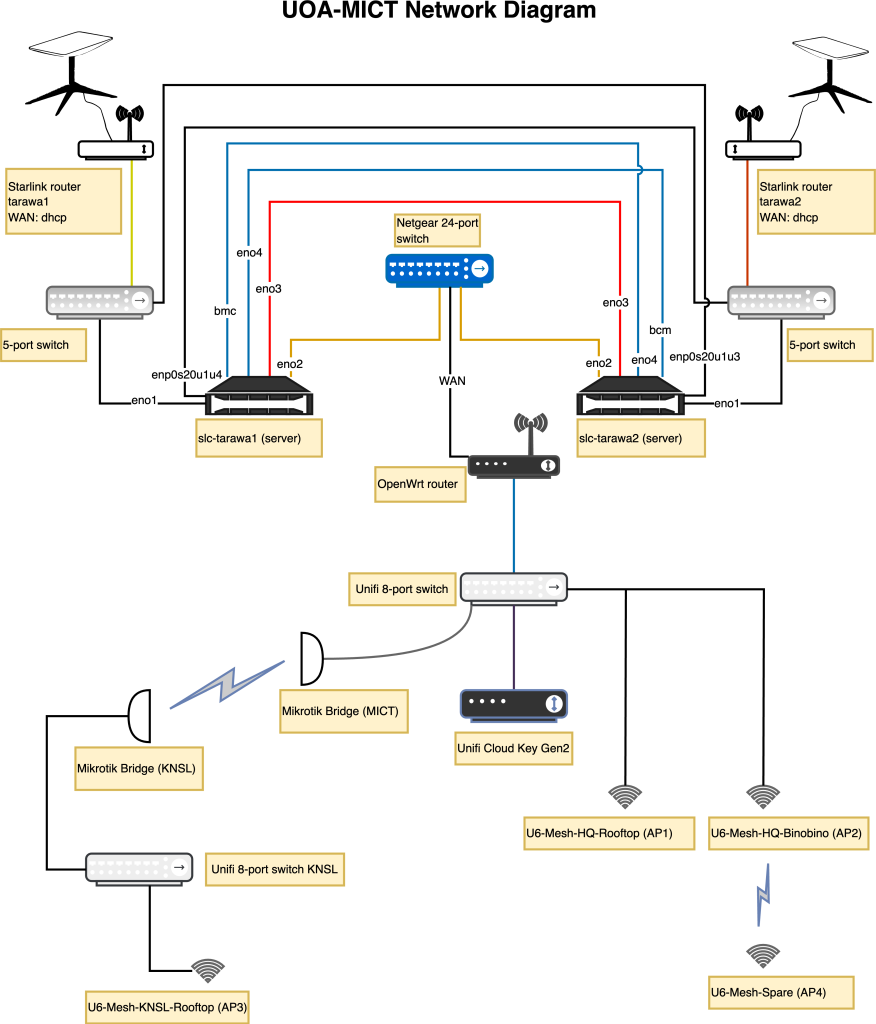

Figure 1 shows the final deployment configuration topology in Tarawa.

Power matters

One challenge in troubleshooting the system as deployed was the frequent power outages in the Betio area of Tarawa. These have been a long-standing problem, due both to a lack of generating capacity (and difficulties in getting this addressed during Covid-19) and to overloaded feeder cabling between the islands of the atoll. Between commissioning in January 2024 and late June, we counted nearly 20 outages, a couple of which lasted over a day.

Our systems are set up to e-mail us whenever our uninterruptible power supplies (UPSs) operate on battery and initiate system shutdowns. So far, this has worked well, and the UPSs have protected our equipment perfectly. Sadly, the same could not be said for some of the equipment run by the Ministry, which was damaged during one of the outages. As a result, we were able to assist with connectivity for some of the Ministry's services until alternative arrangements could be made.
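The alerting and shutdown behaviour can be expressed as a small decision function. In practice this is usually delegated to a UPS monitoring daemon, and the report does not say which one is used; the thresholds below (40% charge, 10 minutes on battery) and the action strings are purely hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class UpsStatus:
    on_battery: bool
    charge_percent: float
    minutes_on_battery: float

def ups_actions(status: UpsStatus, was_on_battery: bool,
                min_charge: float = 40.0, max_minutes: float = 10.0) -> List[str]:
    """Decide which alerts to send and whether to shut down (hypothetical policy)."""
    actions: List[str] = []
    if status.on_battery and not was_on_battery:
        actions.append("email: mains lost, running on battery")
    if status.on_battery and (status.charge_percent <= min_charge
                              or status.minutes_on_battery >= max_minutes):
        # Shut down while enough charge remains for an orderly shutdown.
        actions.append("email: initiating orderly shutdown")
        actions.append("shutdown")
    if not status.on_battery and was_on_battery:
        actions.append("email: mains restored")
    return actions
```

Keying the alerts on the transition (`was_on_battery`) rather than the current state avoids sending a fresh e-mail on every poll during a long outage.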

We were therefore thrilled to hear that the Ministry had signed a contract to have a large solar photovoltaic system with batteries installed. This installation was planned for June 2024, but at the time of writing, we had not heard that it had been completed. We are, however, hopeful that it will be operational by the time we visit again in September. We will also take along and install additional surge protectors for our equipment.

Gremlins!

As he was adding the various tunnel functionalities, Wayne noticed that the central WiFi router was exhibiting mysterious issues that had not appeared during testing. Not all of these could be resolved by configuration changes. For example, the router's DHCP server would suddenly stop issuing IP addresses to clients. Wayne replaced the router with his own personal device, which seemed to solve the problem. We then sent a replacement router to Kiribati, which, however, showed similar issues on arrival. Both of our routers behaved much better at Wayne's residence.

We therefore decided to extend Wayne's stay on Tarawa. While this did not lead to a resolution of the router problem, we got a number of clues as to the driver behind the issues: the local power supply. During Wayne's extended stay in Tarawa, several items of equipment at the Ministry were damaged by power surges, including a number of their own WiFi access points. While our equipment escaped unscathed, it became apparent that the mains power phase it was connected to was badly overloaded, causing brownouts in the neighbourhood. Our equipment would have added relatively little load on its own compared to, say, an air-conditioning unit, but this still gave us a pointer as to where we might need to look.

At this point, the principal investigator remembered having had issues with two routers from the same manufacturer at his home a few years earlier. One of these routers had also been brand new; once taken back to the shop, it had behaved normally, only to malfunction again later at home. The difference between the two locations had been inverter-supplied mains from a solar system at home versus national-grid mains at the shop. Could the power in Tarawa be the culprit?

Having a reference unit of the router available in Auckland, we noticed that it had a rather puny power supply: 12 V DC at 1.5 A. We fashioned an adapter to insert into the DC power lead between the power supply and the router, and connected it to the principal investigator's trusty 35-year-old oscilloscope. This showed that the power supply exhibits significant ripple once the router has booted, including what appears to be a high-frequency component well beyond the oscilloscope's bandwidth. We have since repeated the experiment with a beefier power supply and swapped the supply on our September 2024 visit, as well as installing a DC power filter (and giving Wayne his router back). We have also submitted an APNIC technical blog article on the issue, as we consider it likely that this problem may occur elsewhere in similar settings.
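For readers who want to repeat the measurement with a sampling oscilloscope or data logger, peak-to-peak ripple is simply the spread between the highest and lowest sampled voltage. The sketch below assumes the samples have already been exported as a list of voltages; the function name is hypothetical. It can only capture ripple within the instrument's bandwidth, so a high-frequency component like the one observed here would be underestimated.

```python
from typing import Sequence, Tuple

def ripple(samples_v: Sequence[float], nominal_v: float = 12.0) -> Tuple[float, float]:
    """Return (peak-to-peak ripple in volts, ripple as a percentage of nominal)."""
    if not samples_v:
        raise ValueError("need at least one sample")
    vpp = max(samples_v) - min(samples_v)
    return vpp, 100.0 * vpp / nominal_v
```

For reference, the supply's rated output is 12 V × 1.5 A = 18 W.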

The mystery of the disappearing packets ... solved at last

Another issue that we had to deal with was periodically disappearing packets. When we turned the coded tunnel on, we would observe perfect functionality for about eight seconds, followed by no functionality for about 20 seconds, then things would work again for eight seconds, then not, and so on. Investigation quickly revealed that the lost packets would leave the encoder in Auckland but would not arrive at the decoder in Kiribati during these 20-second intervals. We also observed that the problem did not occur if we directed the coded tunnel from the Auckland side at test endpoints that did not involve Starlink. Curiously, neither did it occur if we swapped the coded tunnel for a UDP-based Wireguard VPN tunnel using the same ports.
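A pattern like this is easiest to spot by collapsing per-second receive counts at the decoder into alternating up/down runs. The sketch below assumes such counts are available (for example, from a packet capture binned by second); the function name is hypothetical.

```python
from itertools import groupby
from typing import List, Sequence, Tuple

def on_off_runs(received_per_second: Sequence[int]) -> List[Tuple[bool, int]]:
    """Collapse per-second packet counts into (link_up, duration_in_seconds) runs."""
    return [(up, sum(1 for _ in group))
            for up, group in groupby(count > 0 for count in received_per_second)]
```

A regular alternation of roughly (True, 8) and (False, 20) runs points to a systematic cause rather than random loss.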

Our first suspicion was therefore that the packets were being dropped by a deep packet inspection firewall somewhere, even though the pattern of drops seemed rather odd. We got in touch with a contact in SpaceX network engineering who kindly offered to help, although it took some time for them to understand the problem. Eventually, in mid-June, SpaceX were able to confirm that our packets were all reaching their network and, crucially and somewhat surprisingly to us, were all arriving at the Dishy connected to our server.

So why didn't we see these packets? SpaceX asked us to ensure that the link was only carrying the coded tunnel traffic, so we deactivated the aforementioned WiFi dongle - but not before checking that it wasn't carrying any essential traffic that we had forgotten about. We did so with tcpdump - just to discover that all our lost packets were happily arriving at the WiFi dongle rather than the Ethernet interface!

This is what happened: The first ingredient in the problem was that on the coding server in Tarawa, the kernel module doing the coding uses a pseudo-interface that is set as the "master" of the Ethernet interface, so the decoder for the tunnel was only reachable via that interface. The second component was the fact that the Starlink router outputs packets received from the satellite link to both its WiFi interface and to the Ethernet adapter. With the server's Ethernet and WiFi interfaces both on the same network, and their IP addresses in Linux being owned by the kernel rather than the interface itself, whichever interface got to see the incoming packets first also got to handle them. This meant that packets for the decoder were dropped unhandled if first seen on the WiFi interface. The Wireguard tunnel on the other hand listened on its port without being bound to a particular interface - so Wireguard saw these packets, the kernel module did not. Lesson learned!
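The mechanism can be reduced to a toy model: a receiver bound to all interfaces (as Wireguard was) gets every packet, while a receiver reachable only through one interface (as the coding module was) loses every packet that the kernel first sees elsewhere. The interface names `eth0` and `wlan0` are assumptions, and the 8/20 split in the test data merely mirrors the observed pattern; the model does not explain why arrivals alternated between interfaces.

```python
from typing import Optional, Sequence

def delivered(first_seen_on: str, bound_to: Optional[str]) -> bool:
    """Whether a receiver gets a packet that the kernel first saw on first_seen_on.

    bound_to=None models a socket listening on all interfaces; a string models
    a receiver that is only reachable via that one interface.
    """
    return bound_to is None or bound_to == first_seen_on

def loss_fraction(arrivals: Sequence[str], bound_to: Optional[str]) -> float:
    """Fraction of packets lost, given the interface each packet was first seen on."""
    lost = sum(1 for iface in arrivals if not delivered(iface, bound_to))
    return lost / len(arrivals)
```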

The workaround was unfortunately not ideal. At the end of the reporting period, we were using the second server (that connects to the other Starlink unit in Kiribati) as the default route for the first server, NAT-ing the packets on this route, thereby giving the first server's kernel module and its Ethernet interface a monopoly on the Starlink unit carrying the tunnel, with the WiFi interface on the first server disabled.

We have since changed the network of one of the Starlink units (this is now possible) and have cross-connected the two servers and Starlink units by means of two small switches. This now allows one external physical interface on each server to be used for the default route through one Starlink unit, and another for the route to the remote encoder/decoder through the other unit.
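The resulting split follows ordinary longest-prefix-match routing: a specific host route to the remote encoder/decoder wins over the default route. The Python sketch below models this with the standard `ipaddress` module; the interface names are hypothetical, and the addresses come from the RFC 5737 documentation ranges, not from the deployment.

```python
import ipaddress
from typing import List, Tuple

Route = Tuple[ipaddress.IPv4Network, str]  # (destination prefix, outgoing interface)

def lookup(routes: List[Route], dst: str) -> str:
    """Return the interface of the most specific route that matches dst."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, iface) for net, iface in routes if addr in net]
    if not matches:
        raise LookupError(f"no route to {dst}")
    return max(matches, key=lambda route: route[0].prefixlen)[1]
```

With a default route via one interface and a /32 host route for the remote encoder/decoder via the other, only tunnel traffic uses the second Starlink unit.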

Measurements

The number of distinct clients seen on our WiFi network around the Ministry complex exceeded 500 per day within days of commissioning, showing that the free WiFi service had been noticed and was being used! In June, we saw over 3000 unique devices connect to the network. This was probably in no small part due to Ministry staff adopting the network for their own personal devices and alerting visitors to the Ministry to its existence. Yet this alone does not explain the numbers seen, so word must evidently have spread in the wider community, too.
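Counting distinct devices per day amounts to collecting the set of client hardware addresses seen each day. The sketch below assumes the events have already been extracted as (date, MAC address) pairs from a log such as the DHCP server's; the log format is not specified in this report, and the function name is hypothetical. It counts devices, not people.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Set, Tuple

def unique_devices_per_day(events: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Map each ISO date to the number of distinct MAC addresses seen that day."""
    seen: DefaultDict[str, Set[str]] = defaultdict(set)
    for day, mac in events:
        seen[day].add(mac.lower())  # normalise case so the same MAC is not double-counted
    return {day: len(macs) for day, macs in seen.items()}
```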

Due to the late resolution of the problems with the coded tunnel, we were unable to conduct comparative measurements for a long time. These comparative measurements record daily goodput volume, with one of the Starlink/server systems in Tarawa being tunnelled and the other running "plain vanilla" Starlink, and the WiFi network being switched between the two each day. They need to run for at least several weeks to yield meaningful data, as there is a lot of natural variation in the traffic.
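One way to organise such a comparison is sketched below, assuming daily goodput totals are available per calendar day; the mode labels and function names are hypothetical, and no project data is used. Days missing from the goodput record (for example, due to power outages) are simply skipped.

```python
from datetime import date, timedelta
from statistics import mean
from typing import Dict, List

def schedule(start: date, days: int) -> Dict[date, str]:
    """Alternate the WiFi network between the coded and the plain system each day."""
    return {start + timedelta(days=i): ("coded" if i % 2 == 0 else "plain")
            for i in range(days)}

def mean_goodput_by_mode(sched: Dict[date, str],
                         goodput_gb: Dict[date, float]) -> Dict[str, float]:
    """Average daily goodput per mode, ignoring days without a measurement."""
    by_mode: Dict[str, List[float]] = {"coded": [], "plain": []}
    for day, mode in sched.items():
        if day in goodput_gb:
            by_mode[mode].append(goodput_gb[day])
    return {mode: mean(values) for mode, values in by_mode.items() if values}
```

A side benefit of strict daily alternation is that, because a week has an odd number of days, each mode lands on every weekday within any two-week window, so weekly traffic patterns do not systematically favour one mode.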

When we then started these measurements remotely, we collected a few weeks' worth of data, but could not use much of it due to persistent power outages. Moreover, on our visit in September 2024, we noticed that we were unable to log into the WiFi ourselves. To our surprise, this was a result of our WiFi network's success: all 150 DHCP IP addresses that we had provided on 3-hour leases had been taken up! We subsequently enlarged the WiFi network from a /24 to a /23 and are now making up to 450 addresses available at any one time. We have since occasionally seen over 200 addresses taken up simultaneously, a sign of the huge pent-up demand in Tarawa.
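The arithmetic behind the subnet change can be checked with Python's standard `ipaddress` module, assuming the usual convention that the network and broadcast addresses are not assigned to hosts. The example prefixes are placeholders, not the deployment's actual addressing.

```python
import ipaddress

def usable_hosts(prefix: str) -> int:
    """Host addresses in an IPv4 subnet, excluding network and broadcast addresses."""
    return ipaddress.ip_network(prefix).num_addresses - 2
```

A /24 offers 254 usable host addresses and a /23 offers 510, so the 450-address DHCP pool fits within the /23 with 60 addresses to spare.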

Unfortunately, this address pool constraint meant that any comparative throughput data collected up to this point would have been unusable, because there would have been an artificial cap on users and therefore on user traffic. Since increasing the number of available addresses, we have restarted the comparative measurements and will report on them once the results are in. At present, however, power outages are increasing again, a situation that is likely to persist at least until the Ministry's solar project is complete. The panels are being installed at the moment.

Project Activities, Deliverables and Indicators

Activities

Beginning of Project

| Activity | Description | #Months |
|---|---|---|
| Encoder/decoder & titrator integration | Integration of encoder/decoder and titrator on a single machine. Currently, these run on separate computers; however, this separation is in principle only needed for troubleshooting and some measurements. Having everything in one box at each end of the link simplifies things. This work will be carried out in the Internet Satellite Simulator Laboratory at the University of Auckland. | 1 |
| Burn tests under load | So far, the longest that our software components (titrator and encoder/decoders) have run continuously under load is 24 hours. However, for deployment, we need to verify that they can run continuously for at least several weeks at a time. This requires designing, implementing and conducting burn tests. The tests will be carried out in the Internet Satellite Simulator Laboratory at the University of Auckland. | 3 |
| Engineering link from/to Gravity Internet | This link will allow Gravity engineers in Auckland to learn how to integrate titrated coded tunnels into their network environment, test configurations and perform troubleshooting on an actual satellite link (but without impacting on other users). This activity will involve training of Gravity engineering staff as well as actual implementation work at Gravity's premises in Newmarket, Auckland and their data centre in the Auckland CBD. The implementation will be able to start once the burn tests are complete. | 3 |

Middle of Project

| Activity | Description | #Months |
|---|---|---|
| Engineering link to Chatham Island | The purpose of this link is to establish a reference system that the local technical support worker on the island can use to troubleshoot and test. It will also allow us to test a whole encoding/decoding and titration chain end to end without disrupting island users. To deploy this link, we will need to visit the island for approximately one week. We will also need some time after this deployment to collect telemetry about diurnal / weekly performance patterns. | 3 |
| Ramp-up | With the reference satellite links available, we will be able to take our work to the next level in terms of roll-out and adoption. Writing grant applications (and training our students to do so) is a big part of this, as is industry liaison. Under the training and organisational development funding of this grant, we will engage external research advisers to assist us and our students with grant writing and introductions to industry partners as required. | 9 |

End of Project

| Activity | Description | #Months |
|---|---|---|
| Adding a titrated coded tunnel facility to the Te One School community hub | This implementation will be the main milestone of the project. It will require another visit to the island, which will also include outreach activities at the School to get the students interested in communication technology. | 3 |

Throughout the Project

| Activity | Description | #Months |
|---|---|---|
| Monitoring, evaluation and publications | This activity includes taking feedback and learning from telemetry and utilisation data collected on the engineering and hub links during the various times of the project. It will help us optimise code parameters for this and future deployments, and publish what we have learned. | 18 |

Key Deliverables

| Deliverable | Status |
|---|---|
| Titrator and network coder integration and endurance testing. To be complete by end of July 2022. | Completed |
| Implementation of engineering link within Auckland. Anticipated completion by end of October 2022. | Completed |
| Second satellite link to Tarawa (was: Engineering link on Chatham Island. To be deployed by end of January 2022). | Completed |
| System image for satellite links. Deployable prototype complete by end of August 2022. | Completed |
| Setup of coder/titrator machines for deployment. Ready two weeks before the respective deployment. | Completed |
| Coded and titrated satellite link deployed on Tarawa (was: Chatham Island). Deployed by end of April 2022. | Completed |
| University link endpoints ready | Completed |
| Procurement of two Starlink units and associated hardware to implement the Tarawa (was: Te One) access point | Completed |
| Tarawa (was: Te One School) deployment visit organised | Completed |

Key Deliverables - Detail

| Deliverable: Titrator and network coder integration and endurance testing. To be complete by end of July 2022. Status: Completed Start Date: December 6, 2021 Completion Date: September 15, 2022 Baseline: Titrator software and network coder operated on different machines. Activities: Titrator software ported to simulator's coder machines. Startup and takedown scripts adapted. Tested by running the titrator in a separate namespace on the machine. Worked but was unstable. Switched to a virtual machine (VM) to achieve better separation of the software. This seems to work - endurance tests completed (3 weeks with high load on a simulated 130 Mb/s medium earth orbit satellite link). Outcomes: Titrator software and network coder operate reliably together on a single machine. Note the titration was no longer required after pivoting to Starlink. Additional Comments: |

| Deliverable: Implementation of engineering link within Auckland. Anticipated completion by end of October 2022. Status: Completed Start Date: May 1, 2023 Completion Date: December 7, 2023 Baseline: No such link, premises are available at Gravity's data center and headquarters in Auckland. Activities: Due to Gravity no longer being involved, we could not implement this, however we are currently working on a solution that uses the existing equipment together with our lab's Starlink unit. This was deployed to the principal investigator's home in November 2023, with the other end installed in our lab. Activation required ports to be opened by the university's IT services - this did not happen until November 2023. Outcomes: Original: Engineering satellite link up and operational. Now: Engineering satellite link using Starlink up and operational. Additional Comments: |

| Deliverable: Second satellite link to Tarawa (was: Engineering link on Chatham Island. To be deployed by end of January 2022). Status: Completed Start Date: January 3, 2024 Completion Date: January 31, 2024 Baseline: No such link Activities: This was originally meant to be commissioned on our first deployment visit to Chatham Island, however this is no longer an option due to Gravity Internet and the school no longer being involved. However, due to the ease of Starlink setup, this is now intended to become part of the link setup at Tarawa in the form of a second parallel Starlink link. Outcomes: Originally: Engineering link on Chatham Island operational. Target: End of August 2022. Now: Two links operational from Tarawa. Target: November 2023. Completed end of January 2024. Additional Comments: |

| Deliverable: System image for satellite links. Deployable prototype complete by end of August 2022. Status: Completed Start Date: December 6, 2021 Completion Date: August 15, 2022 Baseline: System image for network coding machine in simulator exists. Activities: Integration with titrator, network and access configuration, adaptation of startup scripts to the actual link networks as far as possible to do this in advance. Copied to FOG server for fast deployment. Outcomes: Generic system image for deployment on actual satellite links ready. Additional Comments: |

| Deliverable: Setup of coder/titrator machines for deployment. Ready two weeks before the respective deployment. Status: Completed Start Date: May 1, 2022 Completion Date: November 15, 2023 Baseline: No machines in hand Activities: Machines ordered. Machines have been configured with the system image for satellite links. Machines have been deployed. Outcomes: Physical network encoder/decoder servers ready for deployment. Additional Comments: |

| Deliverable: Coded and titrated satellite link deployed on Tarawa (was: Chatham Island). Deployed by end of April 2022. Status: Completed Start Date: August 1, 2023 Completion Date: December 8, 2023 Baseline: No such link Activities: Planning deployment visit to Chatham Island. Due to the switch to Starlink, using the titrator no longer makes sense, as Starlink does not provide a known bandwidth. Link will now terminate in our lab and will connect to the Internet there instead of at Gravity's premises. Coder/decoder machines for both ends have been set up - awaiting IP configuration for the university end. Outcomes: Was: Coded and titrated satellite link deployed and operational on Chatham Island Now: Coded Starlink satellite link deployed and operational on Tarawa, Kiribati Completed: 31 January 2024 Additional Comments: |

| Deliverable: University link endpoints ready Status: Completed Start Date: May 1, 2023 Completion Date: November 15, 2023 Baseline: No such endpoints existed. Activities: This is a new indicator post-Gravity. We have re-used the equipment we were going to place into the Gravity data centre. This has been set up with an operating system, interfaces configured and coder/decoder software installed, along with an SSH tunnel endpoint that allows the remote Starlink terminal's coder to "dial in" and make itself available for uncoded management connections from the endpoint machine. We obtained university IP addresses for the endpoints and had the university's network engineering team open the required network ports. Outcomes: Three endpoints ready for service, connectable by our Starlink units and reachable for both coded and uncoded traffic. Completed mid-November 2023. Additional Comments: Three university coding endpoints (one for local engineering link and two for links from Te One) ready for operation by middle of July 2023. |

| Deliverable: Procurement of two Starlink units and associated hardware to implement the Tarawa (was: Te One) access point Status: Completed Start Date: June 1, 2023 Completion Date: December 5, 2023 Baseline: No such equipment at hand. Activities: Orders placed and equipment received. Outcomes: Equipment and parts in hand. Target: mid-July Completed: early December 2023 (except for spares) Additional Comments: This is a new indicator (post-Gravity). As we now have to provide the satellite links, we will have to procure two Starlink units for the Te One School hub, along with uninterruptible power supplies, WiFi routers and small parts. Procurement should be complete by middle of July. |

| Deliverable: Tarawa (was: Te One School) deployment visit organised Status: Completed Start Date: October 1, 2024 Completion Date: December 11, 2023 Baseline: Nothing organised Activities: Originally: Fix dates in consultation with Te One School. Await approval of internal budget changes. Book flights and accommodation and reserve rental car. Organise air freighting of equipment / documentation required to fly the UPSs. Now: Do same for deployment location in Tarawa instead. Outcomes: Confirmed travel itinerary and air freight organised. Target: end of October 2023. Completed 11 December 2023 Additional Comments: Te One School deployment visit organised (flights, rental vehicle, accommodation) |

Project Review and Assessment

We have achieved our initial technical objectives in terms of system integration of encoder/decoder and titrator on a single physical machine and deployment of a coded tunnel on a production satellite link serving a useful purpose (community Internet) beyond coded tunnel research.

Several of our objectives had to be reformulated after our two initial partner organisations ceased communicating. The lesson learned here is that piggybacking on an existing project over which we have no control comes with the risk of that project not eventuating, with corresponding knock-on effects on one's own plans. We did not foresee Optus D2 going as quickly as it did, nor Starlink arriving as quickly as it did, and perhaps relied a bit more than we should have on personnel and organisational continuity among our partners and on the relationship between them. Lesson learned!

We count ourselves lucky to have had alternatives available, in the form of Starlink rather than the planned geostationary links, and in terms of being able to deploy in Kiribati. In exploiting the alternatives, we could build on new in-house competencies (Starlink) and existing relationships (in particular Wayne Reiher's contacts in Kiribati).

Seeing the project accepted so strongly by the Tarawa community was a real morale booster, especially after weeks of rain. Seeing the network swamped, seeing the kids in the street enjoying their access after school, and hearing of its social impact on Tarawa's resident and visiting seafaring community was heartwarming.

Ironically, the final shape of the project is somewhat closer to what we would have proposed originally had the Pacific Islands been accessible at the time of application. Nevertheless, not being able to maintain and strengthen the new relationships that we had forged with Gravity and Te One School remains a disappointment to us.

Yet Tarawa turned out to be anything but a bad second choice. With over 3000 users seen, the number of users that are benefiting from the WiFi service in Tarawa is several times higher than the number we could have achieved at Te One, with fewer than 700 people on Chatham Island. Being able to add to the formal qualifications of a number of local personnel has been an additional bonus that we could not have equivalently achieved in the Chathams.

Having to also implement our own WiFi network there rather than being able to plug into partner infrastructure as anticipated for Te One resulted in a somewhat smaller network than one that we could have built with more design and ground preparation time. This presents potential for future growth and tag-ons.

The technical work on the integration has created Docker container internetworking competency in our group that we did not previously have. This is a somewhat off-label use of Docker containers: normally, containers communicate with the outside world rather than, as in this instance, with each other. The principal investigator has since worked with Chris Visser at Internet Initiative Japan on inter-container communication and has become aware of the "Mini-Internet Project", which originated at ETH Zürich. This project also uses such inter-container communication, and Chris is currently trying to adapt it as a training tool for the APNIC Academy and similar initiatives. We will now be able to apply our new skills when attempting to disentangle our two units in Kiribati for additional robustness.

The pivot to Starlink links also allowed us to add a new research component to the project: Nobody has used network-coded tunnels via Starlink before, and it will be interesting to see what difference they make.

Using a redundant setup in Tarawa with two independent but mutually accessible servers and Starlink units was a design feature that paid off handsomely, especially when the need arose to have an alternative path to the outside world that did not depend on the coded tunnel. Similarly, the investment in the two UPS units was one we were glad to have undertaken given the number of power outages over the last nine months - and seeing what they did to other equipment on site.

An unexpected side benefit of our Tarawa visit in September was the opportunity for the principal investigator to pursue his interest in solar power supply and in Starlink capacity. On the former topic, it was interesting to compare notes with the Ministry staff. Despite the huge opportunity that solar power presents for Tarawa, this is not yet matched by on-the-ground experience with large solar systems. Donor-funded systems are being installed by contractors without technical expertise being passed on to local staff. This clearly increases project risk, as staff with local knowledge remain in the dark about important aspects of a system's design, e.g., whether and how a system is connected to the local power grid, and whether it will operate as a primary or only as a backup power source.

In terms of Starlink, it was interesting to find that South Tarawa is perhaps one of the more unexpected places on Earth where Starlink seems to be about to reach capacity. Moreover, as Kiribati requires Starlink owners to apply for a ground station licence, the number of licensed units is reasonably well known, and the number of unlicensed units can at least be roughly estimated. This means that we now know that the number of terminals that Starlink can comfortably serve in a single cell is at most in the low thousands.

Diversity and Inclusion

After the loss of a female PhD candidate to graduation and the loss of a female staff member in the group to another university, we have sadly not been able to recruit another female staff member or student into the group. Recruiting students in general is difficult in the current job market, and the female staff who have joined our School since the end of the pandemic work in other areas. The position of the female staff member who left our group has not yet been refilled, despite requests to this effect from our end.

We have been somewhat more successful on the LGBTIQ+ front, although this was not the result of any specific recruitment effort in that community. Note also that NZ privacy legislation prevents us from identifying the person involved.

Raising our Pasifika profile was an explicit goal in this project, and Wayne Reiher from Kiribati has now become a core member of the team. He has already delivered a string of mission-critical contributions, including leading our pivot to Kiribati, piloting a Docker solution that combines the coders and titrators on a single physical machine, and actually deploying the project in Kiribati. He has also been closely involved in our Starlink work to date.

Using project funding to upskill Ministry staff via CompTIA courses has also allowed us to develop local skills, something that we were told had been very welcome and useful. Being able to meet some of the team that benefited (and seeing a couple of them participate actively in the 2024 Pacific IGF and APNIC58) has been a real joy.

Our work in the Pacific space is certainly being noticed. The principal investigator has been involved in a number of Pacific-related interdisciplinary efforts that grew out of the project's roots after the 2022 Hunga Ha'apai eruption in Tonga, where we played a central role in keeping information flowing between the Tongan communications community and the New Zealand geophysics community: The former wanted to know what had happened to their fibre-optic cables and why, and the latter were interested in communications data ranging from cable ship observations to cell phone tower outage logs. This work is ongoing and is contributing to the scientific understanding of the eruption and its aftermath: E.g., in 2023, we were able to obtain cell phone tower log data for a base station damaged by one of the tsunamis that followed the eruption. The timing information in the log data invalidated the existing theory as to the tsunami's source, and led to a new hypothesis about an additional volcanic event further down in the volcano's caldera.

The project has also helped us to get the Pacific Islands noticed in the outside world, most notably during the principal investigator's sabbatical in Japan in 2024: The interest in the Internet research community is clearly there, and being able to point to actual projects on the ground is a bonus. We were also able to install two software RIPE Atlas probes in Kiribati to contribute to the global Starlink measurement effort.

Project Communication

Our communication plan was thrown into nearly complete disarray as a result of having to pivot. In terms of the scientific and technical audience, we had expected to have about a year to communicate findings from our measurements, something we are only now able to start properly. Our beneficiary audience, of course, changed completely.

That said, our pivot to Starlink has also opened up opportunities. There is an international Starlink research community interested in how (well) the system functions. Much of the more technically qualified side of this community congregates on a mailing list to which the principal investigator has been a regular contributor. Tarawa is a location that can at this point only use Starlink if the Starlink satellites connect to the Internet via laser inter-satellite links (ISLs), and as such, we have been able to contribute observations that were new or that contradicted long-held beliefs. For example, it was originally assumed that ISLs would run along orbital planes with relatively few cross-plane links, yet we were able to observe that Tarawa traffic runs via New Zealand regardless of where in the world the other end host is.

We already have a number of other insights, e.g., that our two units in Kiribati, despite being only a couple of dozen metres apart, will generally not communicate with the same Starlink satellite. These and other observations are still largely in the pipeline for publication.

One paper we have already submitted (to ISITA2024, an information theory conference) concerns an estimate of Starlink's user downlink capacity [2]. This was motivated by the observation that the number of Starlink terminals on Tarawa was mushrooming, and by what we had noted last year: that Starlink was using pricing and sales restrictions to manage user density.

In terms of engaging the current community of beneficiaries - the use that our WiFi network is getting on a daily basis speaks for itself. The "walled garden" page of the system acknowledges the roles of APNIC Foundation / ISIF Asia as well as of Education New Zealand (whose scholarship programme dedicated to Kiribati supports Wayne's PhD and which could do with more applicants).

At the time of writing, Wayne is attending PACNOG in Guam, where he had planned to present on the project. As recently as two weeks ago, we had confirmation that he would present; however, this seems to have been a misunderstanding, as the organisers subsequently told him that there was no presentation slot left.

We still intend to submit to the conferences we originally targeted once we have sufficient measurement data in hand, which we expect over the next few months.

We have also shared our experiences and findings to date with the APNIC community at the APNIC56 meeting in Kyoto [3], and did so again at APNIC58, as well as via two submissions to the APNIC technical blog. The principal investigator has also shared his learnings about Starlink with colleagues in the WIDE group in Japan at the 2024 WIDE camp [4] and during his sabbatical at IIJ Labs in Tokyo. Japan is comparatively new to Starlink but is using it for emergency and backup communication in remote and disaster areas, e.g., on the Noto Peninsula, where much of the terrestrial infrastructure was damaged during the earthquake on 1 January 2024.

During our visit to Tarawa in September 2024, we also visited the NZ and Australian High Commissions there to inform them about our project and to discuss strategies for cable projects. We also engaged to an extent with the local expat community, many of whom are closely linked with development and donor organisations, to inform them about the technological aspects and challenges of Starlink and submarine fibre-optic communication. We hope that this has enabled some of them to make more informed decisions about their connectivity options.

Impact Story

The main network providers in Tarawa are Vodafone and OceanLink, who use traditional geostationary satellites to supply Internet service. A submarine fibre optics cable has yet to reach Tarawa and is not expected for another couple of years. This makes Internet access plans relatively pricey. For instance, a typical plan might cost A$2 for 500MB over 24 hours or A$5 for 1GB over seven days.

Unfortunately, many residents cannot afford these rates. Since 2023, Starlink has been accessible in Tarawa through regional roaming plans from Australia or New Zealand, though at a current price of NZ$222 per month, these remain costly for many ordinary citizens.

The free WiFi service provided as part of this ISIF project has enabled many Tarawa residents and visitors to access the Internet for free. Our data reveals that over 500 unique devices connect to the network per day, over 1400 every week, and over 3000 each month. However, the project does not collect information on users' Internet habits and hence has no visibility of this data. Any such information included in this report is anecdotal and was reported to us by our contacts in Kiribati.

The Ministry of Information, Communication and Transport, which is hosting us in Tarawa, has also benefited significantly from the project. In early 2024, a power surge damaged several key pieces of networking equipment. Wayne was able to connect the Ministry's network to our switch, and our system served as the Ministry's sole Internet connection for over a week. The Ministry still relies on our free WiFi service for its visitors, as its Guest WiFi service is still down. We were told that many Ministry staff use the free WiFi as well, and that diverse user groups congregate in the vicinity of the Ministry's complex: from school children to wives of seafarers using the system to video call their spouses on port visits overseas, to fishing crews from the tuna vessels anchored off Betio Port who come ashore to use it. The Ministry is now considering organising seating in the area so that users do not have to stand or sit on the ground.

Project Sustainability

Due to our need to pivot, sustainability in terms of funding and community appropriation initially took a bit of a back seat - we expected opportunities to eventuate and appropriation to happen as we implemented in Kiribati and progressed to the grant application phase that will leverage our deployment. This has happened to a good extent - not least because the PhD student involved in the deployment (Wayne Reiher) is on leave from his position as Kiribati's National Director of ICT at the Ministry of Information, Communication and Transport. Wayne will be able to look after the project going forward, but we have also used some of the capacity building component of the grant to upskill staff at his Ministry, and this should further assist in sustainability.

Having partnered with the Ministry, we see opportunities for further collaboration on connectivity issues in Kiribati, but also a potential pipeline for further graduate research students in years to come.

We returned to Kiribati in September 2024 to perform maintenance and upgrades on the deployed system, chiefly to address the aforementioned power issues, which necessitated swapping out routers, and to install two switches that allowed us to disentangle our two systems in Kiribati again. At the time of writing, Wayne is using a home location research grant to spend several months in Tarawa to continue supporting and developing the project there.

Project Management

Our hope was to have Wayne Reiher from Tarawa (Kiribati) on board as a PhD student from early 2022 onwards. The NZ government had signalled potential priority entry for postgraduate students, and Wayne holds a New Zealand government scholarship. Unfortunately, recipients of scholarships in his programme were then not prioritised as we had hoped. When NZ's borders eventually opened and Wayne was invited to apply for a visa, we initially faced logistical problems: Immigration New Zealand's visa processing capability had all but disappeared during the pandemic, and Kiribati only had two flights a month until July. This meant that despite strong support from the NZ High Commission in Tarawa, the application process became drawn out, with documents and medical samples unable to get where they needed to go. Eventually, we enlisted the help of a NZ Member of Parliament. He kindly put a few questions to the NZ Minister of Immigration on our behalf, and Wayne's visa was granted the day after the Minister's response, with Wayne finally arriving in NZ in October 2022. A nice outcome of this exchange was that we are likely to see hundreds if not thousands of Pacific Islanders, previously barred through no fault of their own, becoming eligible for New Zealand visas in the near future.